MongoDB Replication

What is MongoDB Replication?

In simple terms, MongoDB replication is the process of keeping copies of the same data set on more than one MongoDB server. This is achieved with a replica set: a group of mongod processes that maintain the same data set.

Replication enables database administrators to provide:

Automated copying of data

Data redundancy / Data duplication

High availability of data

Increased read capacity

Additionally, replication can be used as part of load balancing: read operations can be distributed across the members of the set, depending on the use case, while write operations always go to the primary.

How MongoDB replication works

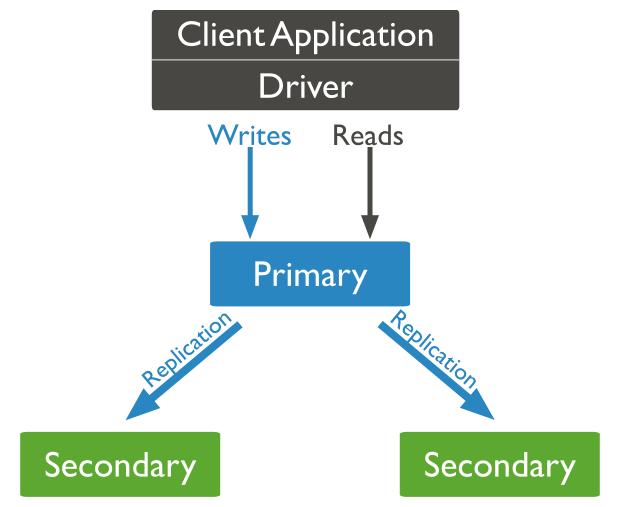

MongoDB handles replication through a Replica Set, which consists of multiple MongoDB nodes that are grouped as a unit.

A typical production replica set has at least three MongoDB nodes:

One of the nodes will be considered the primary node that receives all the write operations.

The others are considered secondary nodes. These secondary nodes will replicate the data from the primary node.

While the primary node is the only instance that accepts write operations, any node in the replica set can serve read operations. By default, clients read from the primary; reading from secondary nodes is configured through the read preference of a supported MongoDB client.
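
As a rough illustration of that client-side configuration, the sketch below builds a connection string that names the replica set and asks the driver to prefer secondaries for reads. The host names and the set name rs0 are hypothetical, and the helper function is only for illustration; replicaSet and readPreference are standard connection string options.

```python
from urllib.parse import urlencode

def build_replica_set_uri(hosts, replica_set, read_preference="primary"):
    """Build a MongoDB connection string for a replica set.

    hosts: list of "host:port" strings (hypothetical names in this sketch).
    read_preference: for example "primary", "secondary" or "secondaryPreferred".
    """
    options = urlencode({"replicaSet": replica_set, "readPreference": read_preference})
    return "mongodb://" + ",".join(hosts) + "/?" + options

uri = build_replica_set_uri(
    ["db1.example.net:27017", "db2.example.net:27017", "db3.example.net:27017"],
    "rs0",
    read_preference="secondaryPreferred",
)
print(uri)
```

A driver given such a string discovers the current primary on its own, so the application does not need to know which host is primary at any moment.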

If the primary node becomes unavailable or inoperable, a secondary node takes over the primary role to provide continuous availability of data. The new primary is chosen through a process called a replica set election, in which the most suitable secondary node is selected as the new primary node.

The Heartbeat process

Heartbeat is the process that tracks the current status of each MongoDB node in a replica set. The replica set nodes send pings (heartbeats) to each other every two seconds. If a node does not respond within 10 seconds, the other nodes in the replica set mark it as inaccessible.

This functionality is vital for automatic failover: when the primary node is unreachable and the secondary nodes do not receive a heartbeat from it within the allotted time frame, MongoDB automatically elects a secondary node to act as the new primary.
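
The timeout rule can be modeled in a few lines. This is a toy model rather than MongoDB's implementation: the member names and timestamps are made up, and time is expressed in plain seconds.

```python
HEARTBEAT_TIMEOUT_SECS = 10  # no reply within this window: member is inaccessible

def inaccessible_members(last_reply_at, now):
    """Return the members whose last heartbeat reply is older than the timeout.

    last_reply_at maps a member name to the time (in seconds) of its last reply.
    """
    return sorted(
        name
        for name, replied in last_reply_at.items()
        if now - replied > HEARTBEAT_TIMEOUT_SECS
    )

# Hypothetical state: node-a last replied 11 seconds ago, the others recently.
last_reply_at = {"node-a": 100.0, "node-b": 108.0, "node-c": 109.5}
down = inaccessible_members(last_reply_at, now=111.0)
print(down)
```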

Replica set elections

The elections in replica sets are used to determine which MongoDB node should become the primary node. These elections can occur in the following instances:

Loss of connectivity to the primary node (detected by heartbeats)

Initializing a replica set

Adding a new node to an existing replica set

Maintenance of a replica set using the rs.stepDown() or rs.reconfig() methods

During an election, one of the nodes first calls for an election, and the other voting nodes vote on whether that node should become the primary. With default replica configuration settings, the time for a cluster to elect a new primary should not typically exceed 12 seconds. Network latency is a major factor in how long an election takes, and it can delay getting your replica set back into operation with the new primary node.

The replica set cannot process any write operations until the election is completed. However, read operations can be served if read queries are configured to be processed on secondary nodes.
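
A candidate only becomes primary if it collects votes from a majority of the voting members. The arithmetic is sketched below; the function names are illustrative and not part of any MongoDB API.

```python
def majority(voting_members):
    """Smallest number of votes that is more than half of the voting members."""
    return voting_members // 2 + 1

def wins_election(votes_received, voting_members):
    """A candidate needs a majority of all voting members, not just of those reachable."""
    return votes_received >= majority(voting_members)

for size in (3, 5, 7):
    print(size, "voting members need", majority(size), "votes")
```

With three voting members, two votes are enough, which is why a three-member set can still elect a primary after losing one member.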

Oplog (Operations Log)

The oplog (operations log) is a special capped collection that keeps a rolling record of all operations that modify the data stored in your databases. Unlike other capped collections, the oplog can grow past its configured size limit to avoid deleting the majority commit point.

How does Oplog work in MongoDB?

MongoDB creates the oplog, with a default size, the first time a replica set member starts. From then on, every operation that modifies the data stored in the database is recorded in it.

The oplog is stored in the local database as the oplog.rs collection.
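
The "rolling record" behavior of a capped collection can be pictured with a fixed-length queue. This is a simplified model: the real oplog is limited by size on disk rather than by entry count, and it can temporarily exceed its limit as described above. The ToyOplog class is hypothetical, and the entry fields (ts, op, ns, o) are only loosely modeled on real oplog entries.

```python
from collections import deque

class ToyOplog:
    """A fixed-capacity log: once full, appending drops the oldest entry."""

    def __init__(self, max_entries):
        self.entries = deque(maxlen=max_entries)
        self.next_ts = 1

    def record(self, op, namespace, document):
        entry = {"ts": self.next_ts, "op": op, "ns": namespace, "o": document}
        self.entries.append(entry)
        self.next_ts += 1
        return entry

oplog = ToyOplog(max_entries=3)
for i in range(5):
    oplog.record("i", "shop.orders", {"_id": i})  # "i" stands for an insert

print([e["ts"] for e in oplog.entries])  # [3, 4, 5]
```

The two oldest entries have rolled off, which is why a secondary that falls too far behind can no longer catch up from the oplog alone.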

Priority

- Helps to decide if a node can be elected as the primary

The priority settings of replica set members affect both the timing and the outcome of elections for the primary. Higher-priority members are more likely to call elections and are more likely to win. Use this setting to ensure that some members are more likely to become primary and that others can never become primary.

The value of the member's priority setting determines the member's priority in elections. The higher the number, the higher the priority.

A priority 0 member

Cannot become a primary and cannot trigger elections.

Can acknowledge write operations issued with a numeric write concern (w: 1, w: 2, and so on). For w: "majority", the member must also be a voting member.

Maintains a copy of the data set, accepts read operations, and votes in elections.

Use case

Members in remote data centers that are not required to become primary.

Members that serve local read requests.
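
To make the effect of the setting concrete, the sketch below represents a replica set configuration as a Python dictionary and lists the members that remain eligible to become primary. The host names and the set name rs0 are hypothetical; priority and host are fields of the member documents in a replica set configuration, and a member without an explicit priority is treated here as having the default of 1.

```python
config = {
    "_id": "rs0",
    "members": [
        {"_id": 0, "host": "dc1-a.example.net:27017", "priority": 2},
        {"_id": 1, "host": "dc1-b.example.net:27017"},                 # default priority
        {"_id": 2, "host": "dc2-a.example.net:27017", "priority": 0},  # remote data center
    ],
}

def electable_hosts(cfg):
    """Hosts that may become primary: every member whose priority is above 0."""
    return [m["host"] for m in cfg["members"] if m.get("priority", 1) > 0]

print(electable_hosts(config))
```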

Hidden Replica Set Members

A hidden member maintains a copy of the primary's data set but is invisible to client applications. Hidden members are good for workloads with different usage patterns from the other members in the replica set. Hidden members must always be priority 0 members and so cannot become primary. The db.hello() method does not display hidden members. Hidden members, however, may vote in elections.

For example, in a five-member replica set where one secondary is hidden, all four secondary members have copies of the primary's data set, but client applications only see the primary and the three non-hidden secondaries.

Use Case

These members receive no traffic other than basic replication.

Use hidden members for dedicated tasks such as reporting and backups.
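
The two rules for hidden members (priority 0, invisible to clients) can be expressed as small checks over the member list. The helper functions and host names are hypothetical; hidden and priority are member configuration fields.

```python
def hidden_config_errors(members):
    """Return a message for each hidden member whose priority is not 0."""
    return [
        f"member {m['_id']}: hidden members must have priority 0"
        for m in members
        if m.get("hidden", False) and m.get("priority", 1) != 0
    ]

def client_visible_hosts(members):
    """Hosts that client applications can discover: everything not hidden."""
    return [m["host"] for m in members if not m.get("hidden", False)]

members = [
    {"_id": 0, "host": "app-a.example.net:27017"},
    {"_id": 1, "host": "app-b.example.net:27017"},
    {"_id": 2, "host": "reports.example.net:27017", "priority": 0, "hidden": True},
]

print(hidden_config_errors(members))
print(client_visible_hosts(members))
```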

Delayed Replica Set Members

Delayed members contain copies of a replica set's data set. However, a delayed member's data set reflects an earlier, or delayed, state of the set. For example, if the current time is 09:52 and a member has a delay of an hour, the delayed member has no operation more recent than 08:52.

Because delayed members are a "rolling backup" or a running "historical" snapshot of the data set, they may help you recover from various kinds of human error.

Considerations

Must be priority 0 members. Set the priority to 0 to prevent a delayed member from becoming primary.

Must be hidden members. Always prevent applications from seeing and querying delayed members.

The length of the delay must be smaller than the capacity of the oplog. For more information on oplog size, see Oplog Size.

The length of the delay must be equal to or greater than your expected maintenance window durations.
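
The 09:52 example and the two bounds on the delay can be checked with simple date arithmetic. In this sketch the oplog capacity is expressed as a time window in seconds, which is an assumption made for illustration (the real limit depends on oplog size and write volume); the date and function names are arbitrary.

```python
from datetime import datetime, timedelta

def newest_visible_operation(now, delay_secs):
    """A delayed member holds no operation more recent than now minus the delay."""
    return now - timedelta(seconds=delay_secs)

def delay_is_reasonable(delay_secs, oplog_window_secs, maintenance_window_secs):
    """The delay should cover the maintenance window yet still fit in the oplog."""
    return maintenance_window_secs <= delay_secs < oplog_window_secs

now = datetime(2024, 1, 1, 9, 52)  # arbitrary date, time taken from the example
print(newest_visible_operation(now, 3600).strftime("%H:%M"))  # 08:52

# One-hour delay, 24-hour oplog window, 30-minute maintenance window.
print(delay_is_reasonable(3600, 24 * 3600, 30 * 60))
```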

Replica Set Arbiter

In some circumstances (such as when you have a primary and a secondary, but cost constraints prohibit adding another secondary), you may choose to add an arbiter to your replica set. An arbiter participates in elections for primary but an arbiter does not have a copy of the data set and cannot become a primary.

An arbiter has exactly 1 election vote. By default an arbiter has priority 0.

In summary, an arbiter:

Always remains an arbiter.

May be required due to cost constraints.

Does not hold a copy of the data set.

Participates in elections.

Has priority 0 by default.

Has exactly 1 election vote.

For example, in a replica set with 2 data-bearing members (the primary and a secondary), an arbiter gives the set an odd number of votes, which breaks a tie.
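
The tie-breaking effect comes down to whether the reachable members still hold more than half of all votes. A small sketch, with a hypothetical helper name:

```python
def has_majority(reachable_votes, total_votes):
    """True when the reachable members hold more than half of all votes."""
    return reachable_votes > total_votes / 2

# Two data-bearing members, no arbiter: if one fails, 1 of 2 votes remains.
print(has_majority(1, 2))

# Two data-bearing members plus an arbiter: if one data-bearing member fails,
# the survivor and the arbiter together hold 2 of 3 votes.
print(has_majority(2, 3))
```

Without the arbiter, the surviving member cannot form a majority and no primary can be elected; with it, the surviving data-bearing member can become primary.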

Automatic Failover

When a primary does not communicate with the other members of the set for more than the configured electionTimeoutMillis period (10 seconds by default), an eligible secondary calls for an election to nominate itself as the new primary. The cluster attempts to complete the election of a new primary and resume normal operations.
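
The trigger condition can be sketched as a predicate over a member's configuration and the time since it last heard from the primary. This is a simplified model, not the server's actual logic: eligibility is reduced here to "priority above 0 and not an arbiter", and the function name is hypothetical.

```python
DEFAULT_ELECTION_TIMEOUT_MILLIS = 10_000  # default electionTimeoutMillis

def should_call_election(member, millis_since_primary_seen,
                         election_timeout_millis=DEFAULT_ELECTION_TIMEOUT_MILLIS):
    """An eligible secondary calls an election once the primary has been silent too long."""
    eligible = member.get("priority", 1) > 0 and not member.get("arbiterOnly", False)
    return eligible and millis_since_primary_seen > election_timeout_millis

print(should_call_election({"priority": 1}, 10_500))   # eligible, timeout exceeded
print(should_call_election({"priority": 0}, 20_000))   # priority 0 never calls one
print(should_call_election({"priority": 1}, 5_000))    # primary seen recently
```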

Replica Set Data Synchronization

To maintain up-to-date copies of the shared data set, secondary members of a replica set sync or replicate data from other members. MongoDB uses two forms of data synchronization: initial sync to populate new members with the full data set, and replication to apply ongoing changes to the entire data set.

Initial Sync

Initial sync copies all the data from one member of the replica set to another member.

Populate new members with the full data set.

Clones all databases except the local database.

Replication

Apply ongoing changes to the entire data set.

Secondary members replicate data continuously after the initial sync.

Secondary members copy the oplog from their sync source and apply these operations in an asynchronous process.
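
Ongoing replication can be pictured as a secondary replaying, in order, every oplog entry newer than the last one it applied. The sketch below is a toy model that handles only inserts ("i") and deletes ("d") on an in-memory dictionary; the entry layout and function name are assumptions for illustration.

```python
def apply_new_entries(source_oplog, data, last_applied_ts):
    """Apply entries newer than last_applied_ts in order; return the new last ts."""
    for entry in sorted(source_oplog, key=lambda e: e["ts"]):
        if entry["ts"] <= last_applied_ts:
            continue  # already applied on this secondary
        if entry["op"] == "i":
            data[entry["o"]["_id"]] = entry["o"]
        elif entry["op"] == "d":
            data.pop(entry["o"]["_id"], None)
        last_applied_ts = entry["ts"]
    return last_applied_ts

source_oplog = [
    {"ts": 1, "op": "i", "o": {"_id": "a", "qty": 1}},
    {"ts": 2, "op": "i", "o": {"_id": "b", "qty": 5}},
    {"ts": 3, "op": "d", "o": {"_id": "a"}},
]

# The secondary has already applied the entry with ts 1.
secondary_data = {"a": {"_id": "a", "qty": 1}}
last_ts = apply_new_entries(source_oplog, secondary_data, last_applied_ts=1)
print(secondary_data, last_ts)
```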

Replica Set Deployment Architectures

The architecture of a replica set affects the set's capacity and capability.

Strategies

Determine the number of members

a) A replica set can have up to 50 members, but only 7 voting members.

b) If the replica set already has 7 voting members, additional members must be non-voting members.

c) Deploy an odd number of voting members.
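
The three rules above can be turned into a quick sanity check over a member list. The function and its warning messages are hypothetical; votes is a member configuration field, treated here as defaulting to 1.

```python
MAX_MEMBERS = 50
MAX_VOTING_MEMBERS = 7

def membership_warnings(members):
    """Check a member list against the size, voting and odd-number guidelines."""
    voting = sum(1 for m in members if m.get("votes", 1) > 0)
    warnings = []
    if len(members) > MAX_MEMBERS:
        warnings.append(f"{len(members)} members exceeds the limit of {MAX_MEMBERS}")
    if voting > MAX_VOTING_MEMBERS:
        warnings.append(f"{voting} voting members exceeds the limit of {MAX_VOTING_MEMBERS}")
    if voting % 2 == 0:
        warnings.append(f"{voting} voting members is an even number")
    return warnings

three = [{"_id": i} for i in range(3)]
four = [{"_id": i} for i in range(4)]
print(membership_warnings(three))
print(membership_warnings(four))
```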

Use Hidden and Delayed Members for Dedicated Functions

Add hidden or delayed members to support dedicated functions, such as backup or reporting.

Add Capacity Ahead of Demand

The existing members of a replica set must have spare capacity to support adding a new member. Always add new members before the current demand saturates the capacity of the set.

Distribute Members Geographically

To protect your data in case of a data center failure, keep at least one member in an alternate data center. If possible, use an odd number of data centers, and choose a distribution of members that maximizes the likelihood that even with a loss of a data center, the remaining replica set members can form a majority or at minimum, provide a copy of your data.
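
Whether a given distribution keeps a majority after losing any one data center is easy to check. The sketch below assumes one vote per voting member; the data center names and function name are made up.

```python
def survives_any_single_outage(voters_per_data_center):
    """True if the remaining voters form a majority after losing any one data center."""
    total = sum(voters_per_data_center.values())
    return all(total - lost > total / 2 for lost in voters_per_data_center.values())

# Two data centers: losing the larger one leaves 1 of 3 votes.
print(survives_any_single_outage({"dc1": 2, "dc2": 1}))

# Three data centers: any single loss leaves at least 3 of 5 votes.
print(survives_any_single_outage({"dc1": 2, "dc2": 2, "dc3": 1}))
```

This is why an odd number of data centers helps: with only two, one of them always holds at least half of the votes.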

Use Journaling to Protect Against Power Failures

MongoDB enables journaling by default. Journaling protects against data loss in the event of service interruptions, such as power failures and unexpected reboots.

Deployment Patterns

Option 1: A replica set with three members that store data has:

One primary.

Two secondary members. Both secondaries can become the primary in an election.

Option 2: A replica set with two members that store data has:

One primary.

One secondary member. The secondary can become the primary in an election.

One arbiter. The arbiter only votes in elections.
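
The two patterns differ only in whether the third member holds data. A minimal sketch of the corresponding configuration documents, written as Python dictionaries, is shown below; the helper function, host names and set name are hypothetical, while arbiterOnly is the member field that marks an arbiter.

```python
def three_member_config(set_name, hosts, use_arbiter=False):
    """Build a three-member config; optionally make the last member an arbiter."""
    members = [{"_id": i, "host": h} for i, h in enumerate(hosts)]
    if use_arbiter:
        members[-1]["arbiterOnly"] = True
    return {"_id": set_name, "members": members}

def data_bearing_count(cfg):
    """Number of members that store a copy of the data set."""
    return sum(1 for m in cfg["members"] if not m.get("arbiterOnly", False))

hosts = ["m1.example.net:27017", "m2.example.net:27017", "m3.example.net:27017"]
option_1 = three_member_config("rs0", hosts)
option_2 = three_member_config("rs0", hosts, use_arbiter=True)

print(data_bearing_count(option_1), data_bearing_count(option_2))
```

Both options have three votes, so either can elect a primary after losing one member, but only the first keeps two copies of the data when that happens.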